GPT Goes Haywire While Learning from Its Own Outputs

A collaborative investigation conducted by scholars from two US universities has scrutinized the behavior of leading commercial AI models. The results were startling and rather unsettling…

It appears that AI systems trained on their self-generated data or the data from other AI systems eventually start to "lose their grip." Consequently, the data acquired by users — images, texts, and more — fail to mirror reality.

This raises a question: Can this situation be remedied and, if so, how?

The Research

A team of specialists from Rice University and Stanford University has discovered certain limitations in AI systems like ChatGPT and Midjourney. Their research unveiled that after five iterations of data generation, these AI networks tend to spiral out of control.

The researchers have even coined a specific term for this phenomenon — MAD, which stands for Model Autophagy Disorder. In simple terms, this means that a model that is trained on AI data starts 'eat itself.' This is evident when the AI begins to disregard data found at the ends of the so-called Bell Curve (also known as the Gaussian function) and instead starts producing an averaged result.

To clarify, the Bell Curve is a bell-shaped graph where the majority of average values are centralized at the peak.

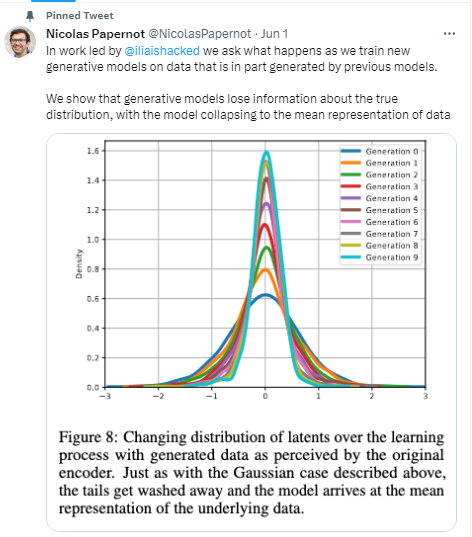

“In work… we ask what happens as we train new generative models on data that is in part generated by previous models.

We show that generative models lose information about the true distribution, with the model collapsing to the mean representation of data,” - tweeted Nicolas Papernot, a machine learning expert and member of the research group.

What did the research unveil? Source: Twitter

Researchers discovered that after approximately five "cycles" of AI information processing, the data on the peripheries of the curve vanishes entirely.

“They look at what happens when you train generative models on their own outputs…over and over again. Image models survive 5 iterations before weird stuff happens,” Tom Goldstein, a professor at UMD specializing in AI security, privacy, algorithmic bias, and the basics of machine learning, clarified. It's at this juncture that MAD, or Model Autophagy Disorder, begins to occur.

To substantiate his point, Goldstein demonstrated the transformation of images after continuous processing by artificial intelligence.

A Stumbling GPT Source: Twitter

The numbers, as seen in the above image, represent the number of iterations. It's clear to see how the AI gradually morphs a distinct individual's photo into a sort of averaged representation.

How Grave is the MAD Issue?

According to the researchers, the autophagy phenomenon is ubiquitous across all AI models and platforms. It's been detected in a multitude of auto-encoders and Large Language Models (LLMs), which are the exact models that enjoy the most popularity among users. Therefore, if your OpenAI's ChatGPT or Anthropic's AI Claude starts behaving erratically - producing incomprehensible texts or incorrect images - don't be taken aback.

At this point, it's crucial to recall the extent of AI systems' infiltration into corporate and public sectors as well as individual usage. Numerous operations, including the Internet of Things, data processing, and more, are heavily reliant on artificial intelligence. It's nearly impossible to enumerate all the spheres it's now entwined with.

Where to Find Psychiatrists Specializing in GPT?

One might think this problem is mostly overblown – just restrict the number of iterations and spare your AI from spiraling into MAD. The developers of upcoming iterations will certainly consider this phenomenon.

Yet, it's not as clear-cut as the researchers suggest. A plethora of unmarked AI products have surfaced online, and their numbers keep snowballing each day. Is it as simple as retraining these existing systems using traditional methods? For AI, that just triggers another "round," only aggravating the predicament further.

Relying on conventional methods to verify outcomes (such as text, images, noise reduction, etc.) via search engines won't cut it. Users will be left in the dark, unsure whether they're dealing with an original or AI-generated product.

Simultaneously, one can't simply "flood" AI systems with new, vetted original information. In the grand scheme of things, it will inevitably land on the fringes of the Bell curve and be dismissed. Hence, the priority for any new information entering the network becomes watermarks that can identify AI content.

Another option on the table is to considerably ramp up the amount of pertinent information across the internet. By boosting the frequency of results on the edges of the Bell Curve, the AI will gradually "draw in" accurate information towards the curve's apex (that is, the average results). While the edges still get trimmed, the AI would begin to harness this new, verified data. The researchers have dubbed this solution the method of altering weight coefficients.

But even here, new hurdles arise. How do we tweak these coefficients? Who would undertake such a gargantuan task? Once again, integrating new data sets will demand verifying the relevance of AI-generated results. It will also entail examining the system's updated settings and figuring out whether this approach has hit its mark.

And if you've stuck around and read this article till the end, you must be nearly going mad yourself, right?:)